InfiniBench

A Benchmark for Large Multi-Modal Models in Long-Form Movies & TV Shows

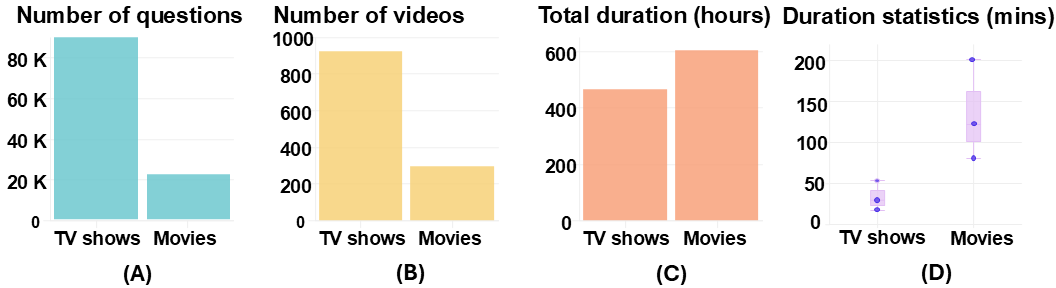

Rigorously evaluating the capabilities of multimodal models across 8 key skills with over 1,000 hours of video content

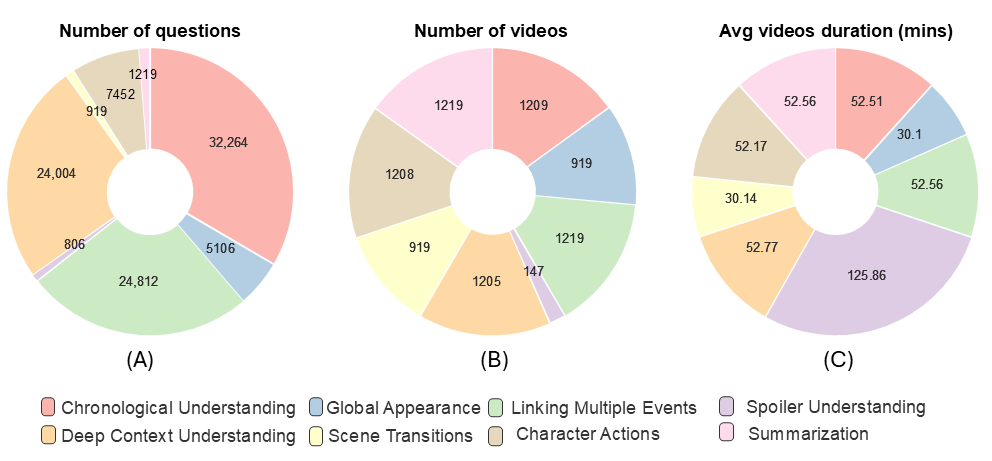

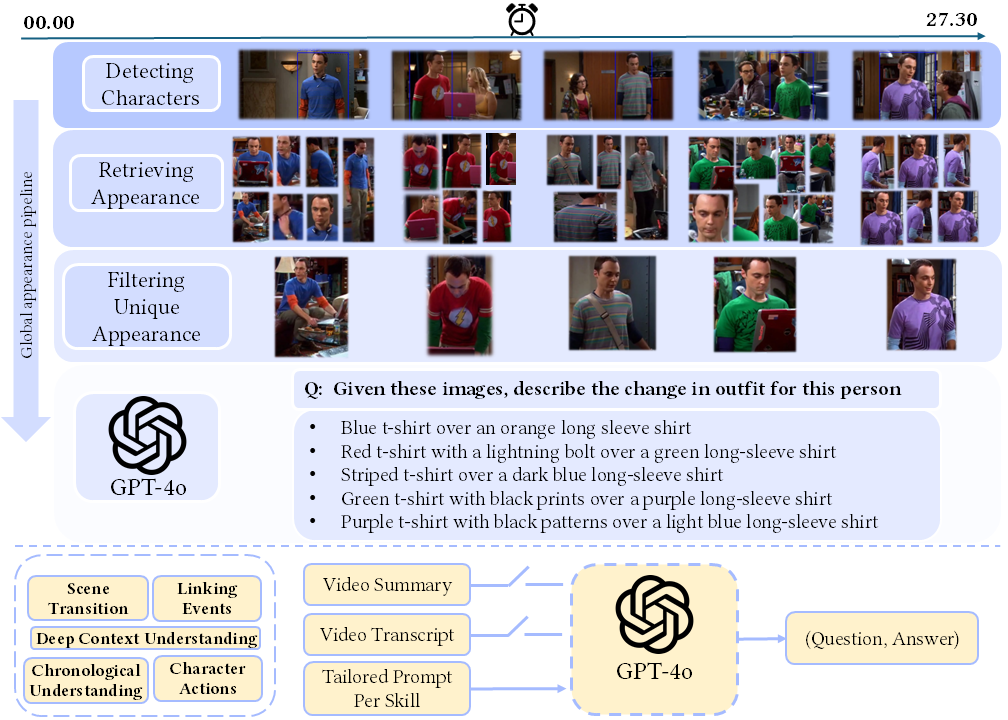

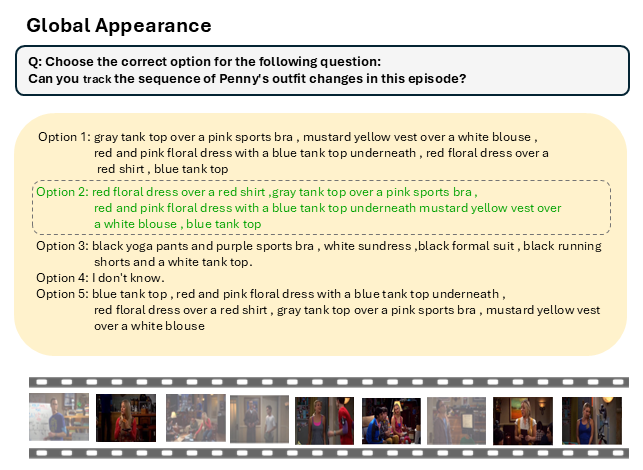

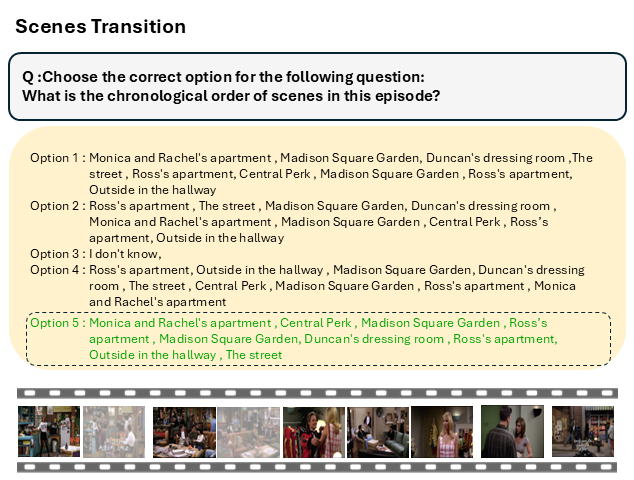

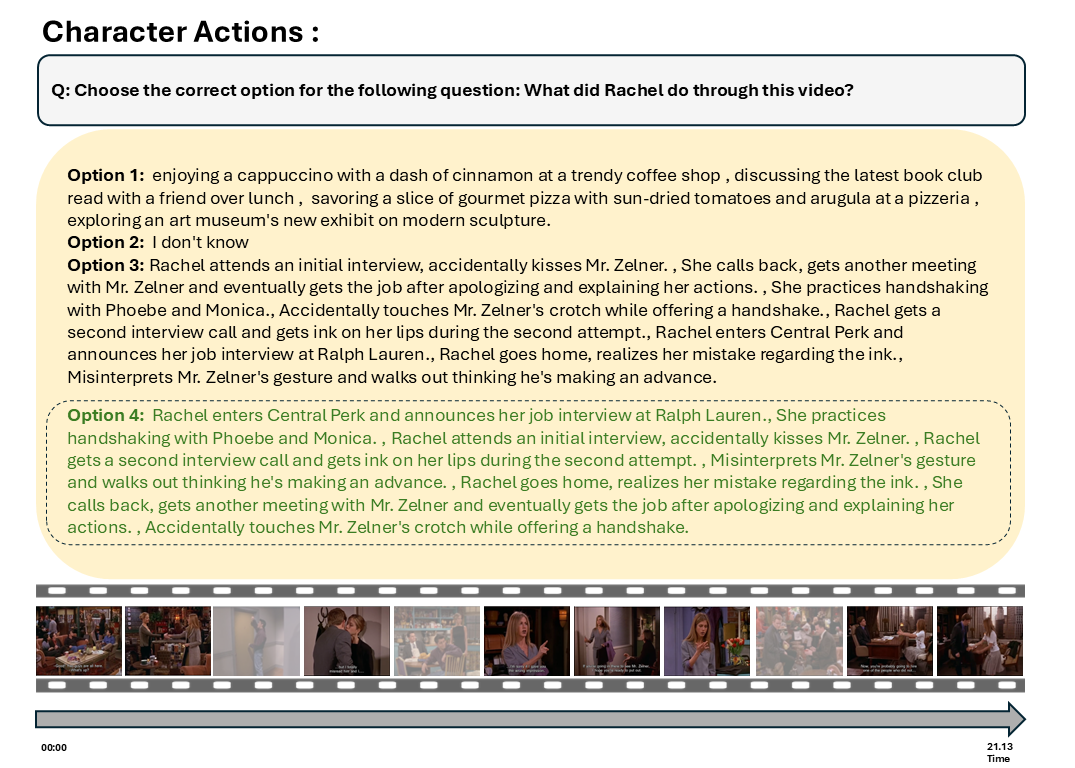

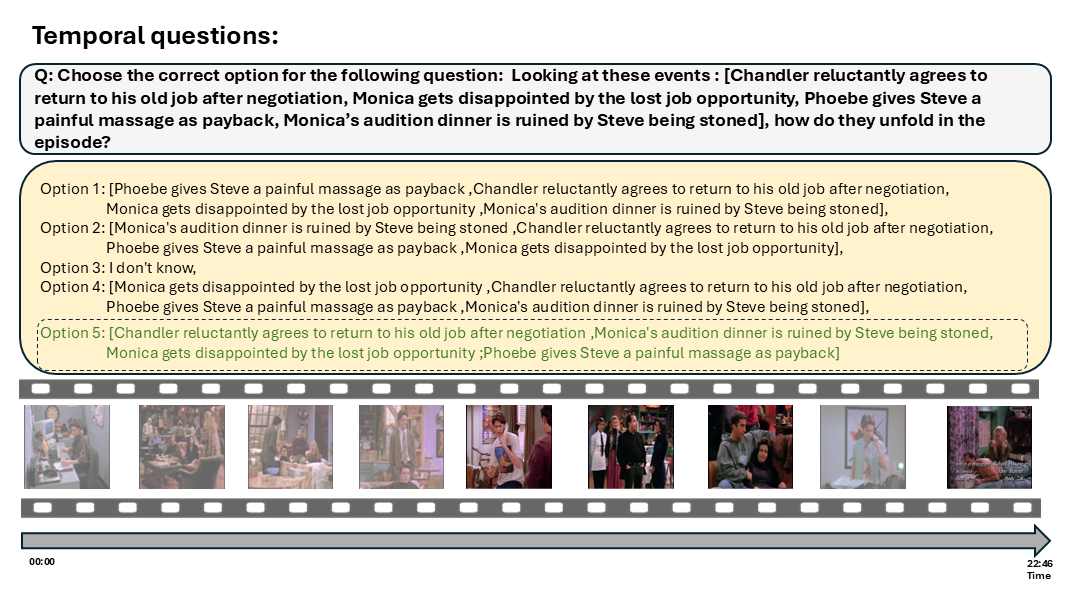

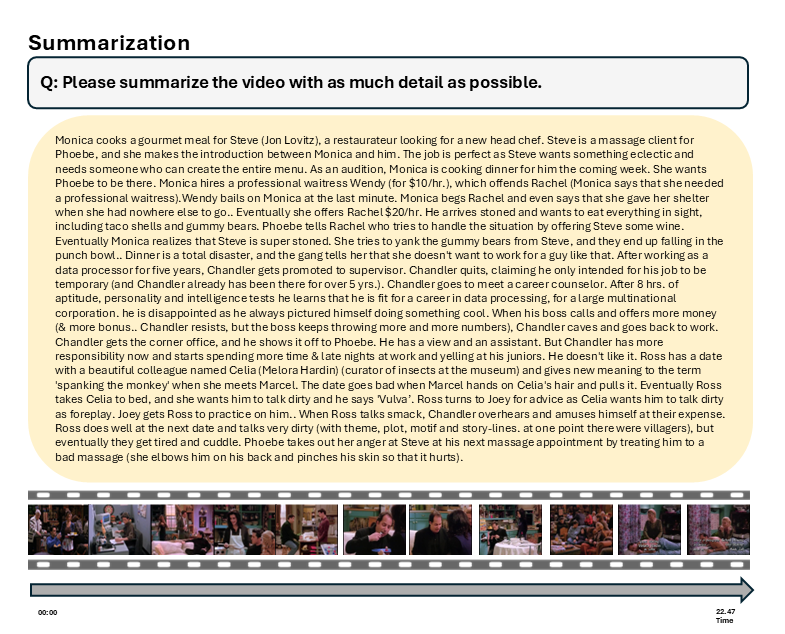

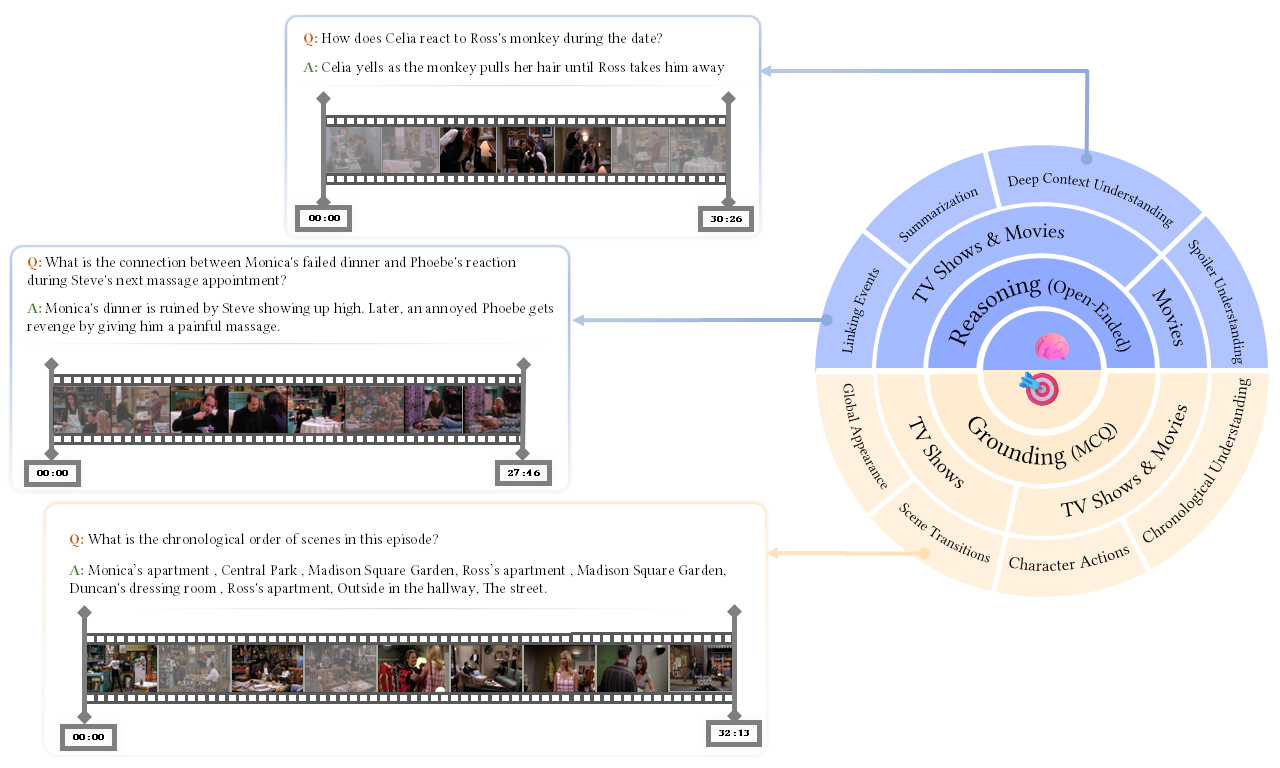

The InfiniBench skill set comprises eight skills. The right side shows the skill categories and question types, while the left side shows examples of both multiple-choice (MCQ) and open-ended questions.